I’m not even going to pretend that there isn’t a conflict of interest here. A better, more ethical person would take steps to maintain their objectivity and protect their sense of integrity. But in a world where news anchor Brian Williams can singlehandedly drive the Taliban out of Afghanistan, and the US Supreme Court has declared that money is people, I will simply press on. In the immortal words of Brian Williams, once more unto the breach!

I Feel Your Pain

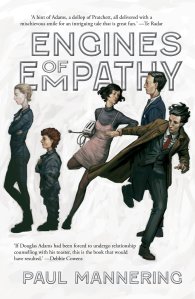

Recently I finished Engines of Empathy, a novel by my brother-in-law Paul Mannering. Paul was nice enough to gift me a copy when I was back in the auld country recently (but apparently, even as a family member, I didn’t fork over enough dolleros to get a signed copy. . .). Engines of Empathy is a piece of parodic speculative fiction in the tradition of writers like Pratchett and Fforde and takes place in a near future where humanity has discovered a new source of power. Cars, appliances, even entire buildings are now powered by something called “empathy energy.” This means that appliances, for example, can now sense and respond (usually negatively; this is still technology after all) to their owners’ emotions; owners, in their turn, need to “engage” with their appliances by being sensitive to the emotional states of their devices. Early in the novel some of the characters begin to suspect that empathy energy is not what it appears to be and, as they say, hi-jinks ensue.

The book is a good read, witty and thoughtful; one of my favorite aspects of it is that it clearly demonstrates a familiarity with the horrific world of customer service, which has inevitably grown up alongside a world whose increasing technical sophistication is fostering, as an almost inevitable corollary, increasing individual incompetence. Anyone who has ever had the misfortune to be trapped in the thankless world of CS will undoubtedly give heartfelt assent to the following: “Resolving technical issues over the phone to someone who can’t tell the difference between a monitor and a keyboard is a challenge comparable to conducting open-heart surgery using only a Pez dispenser while blindfolded and wearing welding gauntlets.” At the same time, however, Mannering is savvy about the many ways in which not only customer service, but the entire retail apparatus tries to manipulate us. Many of the interactions in the novel are framed by characters’ familiarity with or ignorance of the many elaborate schools of vocal inflection and nuance, a system of manipulation and counter-strike that has all the complexity of an arcane philosophical system.

Yet what I most enjoyed about this novel was that it adhered to the one demand I have of speculative fiction, one that so many SpecFic writers get wrong: the central speculative device has to be not just intriguing enough to suck you in, but resonant and thought-provoking enough to keep your interest. The good writers do this really well. There is, for example, Stephenson’s world of knowledge enclaves that are forbidden from interacting with one another in Anathem, or Miéville’s strange vision of two cities sharing the same physical space but invisible to one another in The City & the City. In each case the author takes something that has its roots in the everyday world (the overly specialized realms of academia, the kind of “unseeing” we routinely practice when we encounter the homeless or other people on mass transit) and twists it into something strange and discomfiting. In Engines of Empathy Mannering’s concept of devices driven by emotional energy is so engaging because it makes into a concrete concept what is already a metaphorical reality.

Human beings are demonstrating every day, in countless ways, that their most heartfelt desire is to have an intimate relationship with their machines.

Mannering’s world feels eerily familiar because it is one that we already inhabit without the aid of a mystical new energy source. In a larger sense this is nothing new in that we’ve always had a tendency to anthropomorphise. . .well, everything basically, from cars to guitars, from computers to guns. At minimum we assign many of these objects a gender, but we typically go much further than that and invest them with a personality and intent (go on, how many of you routinely act as if a recalcitrant computer or phone is actively working to frustrate you?).

Boys and Their Toys

I was reminded of Mannering’s novel recently when I finally got around to watching Caprica, the spin-off series of the re-imagined Battlestar Galactica that aimed to tell the story of the original creation of the Cylons and the rise of artificial intelligence. The slow and mannered approach of the series doomed it (like so many books I read, the series desperately needed a good editor willing to stand up to the creators), but the show is intellectually engaging and thoughtful. Throughout the show, even those resistant to the idea of AI as sentient and who try to condemn machines as just “robots” or “tools” are lured into the world of anthropomorphism. Try as they might, they find it hard even to refer to a machine as an “it.” Other characters, meanwhile, are all too ready to invest personality into the devices and technological processes with which they interact. Thus the hapless young scientist Philomon is willing from the get-go to treat the Cylon prototype as a “she.” Philomon’s behavior seems both psychologically astute on the part of the writers and sociologically aware; a tendency to invest soul-less high tech devices with personalities has been a hallmark of male adolescence since adolescence was invented in the early twentieth century.

Part of this adolescent male tendency to interpret things as having human qualities comes from the young male’s inability to control his world. Thus Philomon, stereotypically, is the nerd who can’t get a date, and like so many of his gender, seems to be turning to machines as a compensation for his inability to control real women (i.e. get them to like him). But the series is smarter than that, and positions Philomon’s actions as being about a lack of control more generally. He is relentlessly bullied by Dr. Graystone and his ideas are systematically disregarded. Even his fellow geeky lab-techs dismiss him.

There are some obvious links here with “Gamergate,” the sustained campaign of harassment and intimidation by a bunch of emotionally and intellectually stunted men upset that women have an opinion about videogames. I haven’t written much of anything about this controversy-that-isn’t-really-a-controversy, mainly because I made my thoughts about it pretty clear way back when the opening salvos in the harassment campaign were directed at Jennifer Hepler and Anita Sarkeesian. And when I say that it isn’t a controversy I don’t mean that the behavior of the cyber-terrorists and stalkers isn’t appalling. But it is simply bringing out into the open the festering underside of a development and marketing environment that has always treated women as second class citizens in the videogaming world. Yet, as I tried to point out in those earlier posts, there are some other things going on here apart from (but also mutually reinforcing) the rampant misogyny, not least of which is a culture of entitled players that developers themselves have largely been responsible for creating and nurturing. However, at its core, Gamergate is really all about a problem of technology control. Clearly the sociopaths who get their jollies out of terrorizing women for real and imagined slights are nurturing some pretty substantial personality disorders. But they are also right about one thing: their gaming world is changing, and it is changing in ways that will take that game culture out of their control.

While I have been extremely critical of those who would trade in the stereotype that all gamers are overweight mouth-breathers with poor social skills living in their parents’ basement, clearly you don’t threaten to rape someone or kill their family if you are a well-adjusted person, and there is, sadly, a small (although not as small as some would like to claim) minority of gamers for whom games are compensation for the inadequacies of their lives. The games themselves but, more importantly, the culture around those games, become those things you invest with heightened levels of reality and personality because that makes it all the more satisfactory when you seem to be exerting control over them, a kind of control you don’t possess in real life.

The behavior of the spoiled Gamergate brats might seem worlds away from the concerns of most people, especially those who don’t play games. . .all two of you. It is, however, an extreme example of what happens when someone tries to take our toys, into which we’ve invested significant amounts of our psychological being, away from us. Consider the reaction of you and your friends when someone suggests that you cancel Facebook. Or do without your cellphone for a week (“But. . .but. . .I need it for work!” “Dude, you are a barista.”).

Inappropriate Touching

Every so often I try to construct for myself a list that I think of as the “ultimate 5.” What would be the five essential books that I would like to have all my incoming freshman students read in their first year at college? These are books that aren’t necessarily the greatest works of all time, or maybe even ones that will last, but simply the books that I think both provide information that would be useful at this point in time, and raise questions that they should at least think deeply about (even if, I am happy to accept, their answers to those questions may differ from my own). This will never, ever come to pass; belief in that function of education is no longer one that has much currency at most universities (all colleges pay lip-service to it, but their curricula and their degree requirements say otherwise). It remains, however, a fun exercise, a chance to think again about what I have read recently and test it against some old favorites.

One book that has been on that list ever since I read it in 2011 is Sherry Turkle’s Alone Together: Why We Expect More from Technology and Less from Each Other. Turkle is a professor at MIT and has been studying user interactions with technology for decades. She was in fact one of the early enthusiasts for the transformative possibilities of the brave new world of cyberspace and her early book Life on the Screen (1995) greatly influenced my own thinking about the Internet as a tool for extending our real-world identities. What is so striking about her recent work is that she extensively revises much of her early thinking, admitting in fact that she was wrong in some of her early optimism. Nor is her later work abstractly theoretical. The nature of her work at MIT involves a lot of empirical study and her insights are gleaned from years of conducting experiments to examine how people engage with computers, software, robots, even animatronic toys. You could dismiss her arguments out of hand, but few people have an evidentiary base comparable to hers.

What she has found, a trend that she sees accelerating, is that normal, sane, healthy, well-adjusted people increasingly not only express a willingness to establish an emotional relationship with a machine, but overwhelmingly prefer those relationships to ones with real people.

This is not how it was all supposed to be. Back when Turkle’s first book came out there was a lot of buzz about virtual reality, and in that distant, more innocent time there were a couple of key characteristics of virtual reality that people were excited about. VR (we’ve all seen the pictures, and yes, they were laughable even then, of people wearing bulky headsets and enormous gloves flailing around like someone in the middle of a peyote-fueled vision quest) was going to be about creating a new way of interacting with the space around you (think Star Trek’s holodeck as the idealized version) and it was going to be about sex. Humans being humans, when someone invents a new technology, the first thing that people want to do with it is use it to sell sex. Five minutes after photography was invented someone was already passing around pictures of a naked woman in a compromising position with a goat. So one day, we were told, when VR is “mature”, we were all going to be wearing full-body suits with electrodes and numerous anatomically correct protuberances and cavities that would allow us to experience full-body sex with someone at a distance. There was even a fetching technical name for this: teledildonics. Sadly, the kind of garments that would require an entirely new laundry industry went the way of the jetpack.

It is, however, worth noting a couple of things about that early vision. VR was going to be about changing the way we interact with space around us, and the technology itself was (supposedly) going to disappear, to become simply an intermediary that facilitated an intimate engagement with other people.

So we return from our swirly flashback sequence to a world where the closest thing we have to VR is the dire Google Glass, where VR is designed to keep the space around us at bay, to refuse it; the world itself disappears, and technology becomes a means of organizing and managing relationships, disconnecting from those around us while “connecting” with other similarly disconnected souls. As a bonus, it allows us to enhance our learned cell-phone behavior: wandering the streets making inappropriate comments out loud and bumping into things, behavior that anyone prior to the invention of the mobile phone would have seen as categorical evidence of insanity.

There is a lot of complexity in Turkle’s work which I am not even going to attempt to summarize here. Read it for yourself! But the book raises the obvious question:

In the name of all that is holy, why would people prefer relationships with machines to those with people?

This again is why Caprica is pretty smart because the name of all that is holy does have a lot to do with it. The role that warring polytheistic and monotheistic frameworks played in Battlestar Galactica is well-known, and that plays out in Caprica as well. But it is the patently inadequate ability of religion to deal with human loss and grieving that in the series gives rise to the technology that will in later years form the basis of the Cylon resurrection ability. Of course, as the events of the series make clear, it isn’t necessarily the case that the technology of after-life avatars is any better a way of dealing with grief and loss; rather, it can be sold to people as a better way, and they will buy that fantasy. Perhaps not surprisingly, given this cluster of issues, Mannering’s novel also features religion and religious marketing as a central element in a world dominated by feeling machines.

Yet it is in Caprica’s “V-Clubs,” virtual domains where young people (mainly) go to re-make their identities and revel in everything that isn’t permissible in the real world, that we see the seeds of an answer to the puzzling result of Turkle’s research: that people feel a powerful need not only to personalize their technologies but to prefer the intimacy of technology to real-life intimacy.

Life is tough. For many people it is actually quite shitty and hellacious. That has probably always been true of human existence. But what we find increasingly galling is the widening gap between life as we live it, and the life that the powerful marketing and consumerist juggernaut, which surrounds us and from which there is no escape, tells us that we ought to be living. That is a life of ease, efficiency, convenience, safety, and personal empowerment. Technology was supposed to be the access point to that life. A little reflection shows that to be a crock. Things make some aspects of our lives better, many aspects more challenging. The most challenging aspect of our lives, however, is that we are forced to deal with other people. Now Sartre obviously knew what he was talking about when he described hell as other people, a concept he fleshed out in his play Huis Clos. What makes other people hellish denizens, however, is that they are annoying evidence of the cognitive dissonance between what our life is and the way we are told it should be. Engaging with people can be difficult, inefficient, inconvenient, occasionally dangerous, and soul-sapping. We can take steps to try and mitigate all of this, of course. Many of our buildings and neighbourhoods are segregated so that we won’t have to deal with people whose race, social class, or age offends our sensibilities. Unfortunately, even after that cut is made, the people who are left still insist upon being people: difficult, inefficient, etc.

So in desperation we turn to the same technology that was supposed to make things better. It isn’t just the case that so many of our technologies are sold to us as life-management devices (and that term is occasionally used) but rather that what they are explicitly designed to manage is other people. On Facebook we manage our connections, our phones allow us to manage our contacts, Twitter allows us to manage our followers. They promise to make interacting with people everything that it isn’t in real life (easy, efficient, safe, etc.). Except they don’t really work.

Because there is a terrible irony that underlies Mannering’s novel and Turkle’s research: when you have engines powered by empathy, to use Mannering’s term, they actually destroy empathy for real human beings. Even a passing encounter with an embodied human is an information-dense experience (I’m not romanticizing; that density can be aggravating as often as stimulating). By contrast, even people on FB that I know really well and like a lot always seem to be thin, de-natured entities performing unconvincing versions of themselves. The technology doesn’t increase my understanding and empathy for them as individuals; it rather makes me more impatient with them, repeatedly demonstrates the many ways in which they are not individuals with their own personalities but simply products of their culture (“Look! I’m being creative and individualistic by following the same meme that 10,000 other people have already posted to FB!”), and presents ideas that I would see as being an interesting part of their overall make-up as superficial cliches because I now have irrefutable evidence that everyone else in my FB feed thinks the same thing. Being presented with unmistakable evidence of what people are like in the aggregate destroys our appreciation for what they are like as an individual. Of course, this too is all a lie: I have no real sense of what “the aggregate” is, since programs like FB only allow us to present ourselves in those ways that are the easiest to represent and to collect and manage for marketing purposes. Or at least that is what I am telling myself at the moment, because part of me really doesn’t want to believe that people are as appallingly self-involved, desperate for affirmation, and as obsessed with their own awesomeness as technologies like FB seem to indicate they are.

The end of Engines of Empathy would make a fitting last word to this discussion, but I’m not going to spoil the pleasure of discovering it for yourself. So I will come back to Caprica instead. There is a scene where Philomon is putting the Cylon prototype, unbeknownst to him inhabited by the avatar of Dr. Graystone’s dead daughter Zoe, through a series of diagnostics. He looks up and for a moment the robot’s movements make it look as if it is dancing. Philomon begins dancing with the machine, losing himself in the moment. But then he comes back to himself, looks at the diagnostic screens, at the robot, and sees that the robot’s motions are just that: pre-programmed sequences of movements. This scene shows the yearning for an intimate human-machine relationship, a yearning that can make us temporarily overlook the fact that the machine is a machine. It also shows us something else, however, something that Battlestar Galactica explored to good effect: what we really want from our machine-human relationships is the one thing that we can’t have.

We want a machine that is human.

So you may think I’m barking up the wrong tree. You may think an MIT Professor is also barking up that same wrong tree with me. But just ask yourself this: how much time have you spent today staring attentively at a screen, and how much time staring attentively at a person? How much time have you spent fondly caressing your iPad, and how much time fondly caressing your partner?

Reblogged this on $100 Dialysis and commented:

My brother-in-law English lit professor shamelessly writing about a recent NZ sci fi book by his brother-in-law who’s not me…

Reblogged this on Paul Mannering – Books and commented:

Mark Mullen writes a fascinating essay on humanity’s relationship with technology, and reviews Engines of Empathy along the way.